Automated Data with Incite


Introduction

Manufacturing is undergoing a complete transformation in an era of faster and more readily available data. Machines no longer work in isolation; instead, they transmit their data in real time to centralized monitoring and analytics hubs. Companies that successfully adopt this level of technology will have a substantial edge over their competitors in scrap rate, efficiency analysis, and even throughput. Calculated Systems and Incite Informatics delivered a system that does just that: we designed and piloted a solution on Azure that detects a malfunctioning machine in minutes instead of hours.

Architecture & Solution

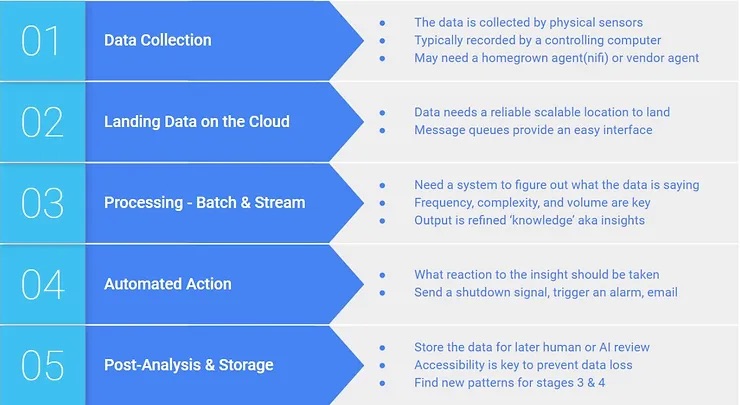

Centralizing and automating manufacturing analysis can be broken into 5 stages:

-

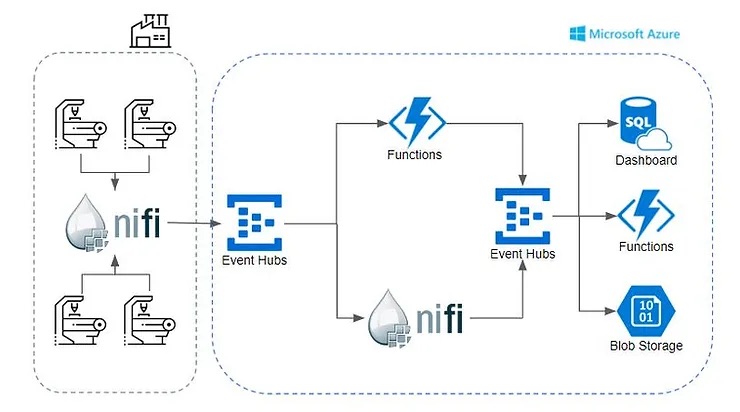

Data Collection This stage is the most dependant on external variables. The specifics of the machines affects storage formats, logging frequency, and even connectivity. Normally this would be a bottleneck in any production pipeline as the hardware teams will need to provide a stream to test the rest of the system. We selected Apache Nifi to be the log transmission agent as it has the ability to serve the production purpose of streaming and the development purpose of simulating a stream. The same architecture and flow(code-SEE HERE) can be used to either as the device monitor or development simulator
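NiFi flows are configured rather than written as code, so the Python below is only a minimal sketch of the idea, not the flow used in this project: one transmission path that accepts either a simulated machine or a real device log through the same interface. The function names, the `machine_id`/`seq`/`part_mm` record fields, and the injected fault are all hypothetical.

```python
import json
import random
from typing import Callable, Iterator, Optional, TextIO


def simulated_machine_log(machine_id: str, n: int, seed: int = 0,
                          fault_at: Optional[int] = None) -> Iterator[str]:
    """Yield n JSON telemetry lines for a hypothetical machine (development use)."""
    rng = random.Random(seed)  # seeded so simulated runs are reproducible
    for i in range(n):
        faulty = fault_at is not None and i >= fault_at
        record = {
            "machine_id": machine_id,
            "seq": i,
            # Healthy parts measure about 10.0 mm; after the fault they drift by +0.5 mm.
            "part_mm": round(rng.gauss(10.0, 0.02) + (0.5 if faulty else 0.0), 3),
        }
        yield json.dumps(record)


def device_log(fh: TextIO) -> Iterator[str]:
    """Yield non-empty lines from a real device log (production use)."""
    for line in fh:
        line = line.strip()
        if line:
            yield line


def transmit(source: Iterator[str], send: Callable[[str], None]) -> int:
    """Forward every line from either source to the sink; return how many were sent."""
    sent = 0
    for line in source:
        send(line)
        sent += 1
    return sent
```

During development, `transmit(simulated_machine_log("press-01", 100, fault_at=80), sink)` can exercise the downstream stages before hardware is available; in production the same `transmit` call would take `device_log(...)` over the real log instead.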

- Landing Data on the Cloud

An important principle in designing a reliable, resilient architecture is decoupling ingestion from processing. This is particularly true in the cloud, where resources can be scaled up and down to reduce cost. The goal is to land messages regardless of how frequently they arrive and then scale processing to match. A message queue or event bus fills this role well: it is easy to use and inexpensive. We used Azure Event Hubs because of its serverless nature and its compatibility with the rest of the Azure ecosystem.
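The decoupling can be illustrated in-process with Python's standard `queue.Queue` standing in for Event Hubs. This is a minimal sketch under that assumption, not Event Hubs client code: the ingest side only enqueues, and processing capacity is set independently by the number of worker threads. The helper names `land`, `start_workers`, and `stop_workers` are hypothetical.

```python
import queue
import threading
from typing import Callable, List

_STOP = object()  # sentinel that tells a worker to exit


def land(bus: queue.Queue, msg: str) -> None:
    """Ingest side: accept the message immediately, however busy processing is."""
    bus.put(msg)


def start_workers(bus: queue.Queue, handle: Callable[[str], None],
                  n_workers: int) -> List[threading.Thread]:
    """Processing side: scaling up or down only means changing n_workers."""
    def worker() -> None:
        while True:
            msg = bus.get()
            try:
                if msg is _STOP:
                    return
                handle(msg)
            finally:
                bus.task_done()  # lets bus.join() know this item is finished

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(n_workers)]
    for t in threads:
        t.start()
    return threads


def stop_workers(bus: queue.Queue, threads: List[threading.Thread]) -> None:
    """Send one stop sentinel per worker and wait for all of them to exit."""
    for _ in threads:
        bus.put(_STOP)
    for t in threads:
        t.join()
```

Messages can be landed before any worker exists; calling `bus.join()` afterwards waits until the workers have drained the backlog, which mirrors landing first and scaling processing to match.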

- Processing: Batch & Stream

Processing data is a multi-headed problem that requires careful consideration. A good rule of thumb for choosing the right tool is the three Vs: volume, velocity, and variety. It is important to treat velocity as a sliding scale rather than a binary choice between batch and stream; modern cloud systems treat it as a greyscale between ‘instant’ and ‘infinite’. In our case we used two separate processing engines, Azure Functions and NiFi. Functions is native to Azure and truly serverless, so processing runs cheaply and sustainably. NiFi adds a high degree of flexibility and agility but requires a configured server.
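One way to picture velocity as a sliding scale is a single windowing parameter. In the minimal sketch below (hypothetical names, not Azure Functions or NiFi code), a window of one event behaves like per-event streaming, a very large window behaves like one batch job, and values in between give micro-batches. Windows here are counted in events for simplicity; production systems often window by time instead.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def process_in_windows(events: Iterable[T], window: int,
                       handle: Callable[[List[T]], None]) -> int:
    """Hand events to `handle` in groups of `window`; return the number of calls.

    window=1 approximates streaming, a huge window approximates batch,
    and anything in between is a micro-batch.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    buf: List[T] = []
    calls = 0
    for ev in events:
        buf.append(ev)
        if len(buf) == window:
            handle(buf)
            calls += 1
            buf = []  # start a fresh list so the handed-off group is not mutated
    if buf:  # flush the final partial window
        handle(buf)
        calls += 1
    return calls
```

The processing logic inside `handle` stays the same at every point on the scale; only `window` moves, which is the practical benefit of not treating batch and stream as separate worlds.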

- Automated Action

Automating actions is the most important step: insights and knowledge do not help the business unless you know what to do with them. In this case study we focused on reducing scrap rate. The initial implementation sent an alert to an alert queue, which in turn triggered an email. As the solution enters phase two, automated shutdown signals and suggested remedial actions can be added.
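The alert-then-email chain can be sketched with the standard library: a scrap-rate check pushes an alert onto a queue, and a separate consumer turns each alert into an email. The threshold rule, the `Alert` fields, and the addresses are hypothetical, and actually sending the message (for example with `smtplib`) is left out.

```python
import queue
from dataclasses import dataclass
from email.message import EmailMessage
from typing import List


@dataclass
class Alert:
    machine_id: str
    scrap_rate: float


def check_scrap(machine_id: str, parts_ok: List[bool], threshold: float,
                alerts: queue.Queue) -> bool:
    """Enqueue an Alert if the scrapped fraction exceeds threshold (hypothetical rule)."""
    if not parts_ok:
        return False
    rate = parts_ok.count(False) / len(parts_ok)
    if rate > threshold:
        alerts.put(Alert(machine_id, rate))
        return True
    return False


def alerts_to_emails(alerts: queue.Queue, to_addr: str) -> List[EmailMessage]:
    """Drain the alert queue and build one email per alert (sending is not shown)."""
    emails = []
    while True:
        try:
            alert = alerts.get_nowait()
        except queue.Empty:
            break
        msg = EmailMessage()
        msg["Subject"] = f"Scrap alert: {alert.machine_id} at {alert.scrap_rate:.0%}"
        msg["From"] = "alerts@example.com"
        msg["To"] = to_addr
        msg.set_content(f"Machine {alert.machine_id} scrap rate "
                        f"{alert.scrap_rate:.1%} exceeds the configured threshold.")
        emails.append(msg)
    return emails
```

Keeping detection and notification on opposite sides of a queue means a later phase could add another consumer, such as a shutdown signal, without changing the detection code.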

- Post-Analysis & Storage

The information remains valuable after events are processed and acted on. Keeping it accessible enables further review and analysis, through which new processing and actions can be discovered. Following big data best practice, we landed the raw data without modifying or parsing it, which ensures that the data can be replayed if an error in processing is found. Event Hubs has a built-in connector to Blob Storage that is easy to configure. We also sent data to a SQL database for analysis; although this requires more parsing, the result is much more accessible to end users.
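The land-raw-then-replay pattern can be sketched as follows, with an in-memory list standing in for Blob Storage and `sqlite3` standing in for the SQL database. Because the archive holds messages exactly as received, the parsed table can be dropped and rebuilt whenever a parsing bug is fixed. The `readings` table and its record fields are hypothetical.

```python
import json
import sqlite3
from typing import Iterable, List


def land_raw(archive: List[str], raw_messages: Iterable[str]) -> None:
    """Append messages to the archive exactly as received (no parsing)."""
    archive.extend(raw_messages)


def replay_into_sql(archive: List[str], conn: sqlite3.Connection) -> int:
    """Rebuild the parsed table from the raw archive; safe to rerun after a parser fix."""
    conn.execute("DROP TABLE IF EXISTS readings")
    conn.execute("CREATE TABLE readings (machine_id TEXT, seq INTEGER, part_mm REAL)")
    rows = []
    for raw in archive:
        rec = json.loads(raw)  # parsing happens only here, never on the archive itself
        rows.append((rec["machine_id"], rec["seq"], rec["part_mm"]))
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

Rebuilding the table from scratch keeps replay idempotent: running it twice yields the same rows rather than duplicates.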


Conclusion

Calculated Systems and Incite Informatics were able to quickly automate data handling for the factory. A cloud-first approach enables companies to scale up an entire project in weeks rather than the months it would previously have taken. Cloud platforms such as Azure offer phenomenal building blocks, and the right open source technology can augment their existing capabilities. This enables companies to launch sweeping technology upgrades within weeks instead of years.